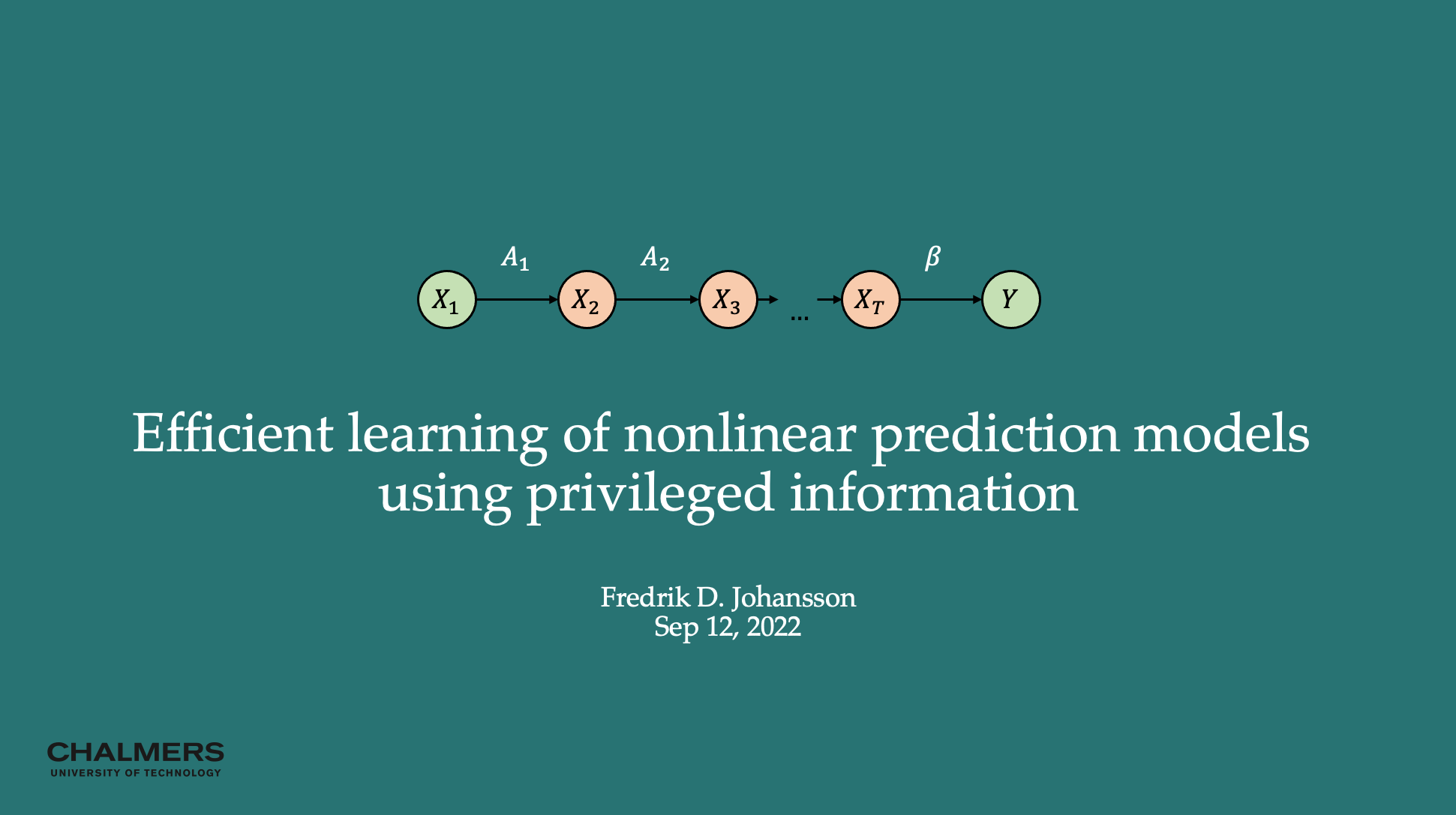

Seminar: Efficient learning of nonlinear prediction models with time-series privileged information

Chalmers machine learning seminars

Abstract

In domains where sample sizes are limited, efficient learning is critical. Yet there are machine learning problems where standard practice routinely leaves substantial information unused. One example is predicting an outcome at the end of a time series from variables collected at a baseline time point, such as the 30-day mortality risk of a patient upon admission to a hospital. In applications, intermediate samples collected between the baseline and the end point are commonly discarded, because they are not available as input when the learned model is used for prediction. We say that this information is privileged, as it is available only at training time. In this talk, we show that making use of privileged information from intermediate time series can lead to much more efficient learning. We give conditions under which this approach is provably preferable to classical learning, and present a suite of empirical results to support these findings.
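
To make the setting concrete, the following is a minimal sketch of the baseline-versus-privileged comparison. It assumes a deliberately simplified linear, noisy time series, whereas the talk concerns nonlinear models. It is an illustration under stated assumptions, not the speaker's method. All names (`fit_ols`, `fit_classical`, `fit_privileged`, `sample`), the transition matrix `A`, the outcome weights `beta`, and every numeric setting are hypothetical. The classical learner regresses the outcome directly on the baseline variables. The privileged learner also uses the intermediate samples at training time, fitting one regression per time step and composing them, so that at prediction time it still needs only the baseline input.

```python
import numpy as np


def fit_ols(inputs, targets):
    """Least-squares coefficients W such that inputs @ W approximates targets."""
    W, *_ = np.linalg.lstsq(inputs, targets, rcond=None)
    return W


def fit_classical(x_baseline, y):
    """Classical learning: regress the outcome directly on baseline variables."""
    return fit_ols(x_baseline, y)


def fit_privileged(x_series, y):
    """Use intermediate samples (privileged, training-time only).

    x_series is a list [X_1, ..., X_T] of (n, d) arrays and y is (n, 1).
    One regression is fitted per step and the results are composed into a
    single map from the baseline X_1 to the outcome.
    """
    W = np.eye(x_series[0].shape[1])
    for x_now, x_next in zip(x_series[:-1], x_series[1:]):
        W = W @ fit_ols(x_now, x_next)
    return W @ fit_ols(x_series[-1], y)


def sample(n, A, beta, steps, noise, rng):
    """Draw n trajectories from a hypothetical linear system with Gaussian noise."""
    d = A.shape[0]
    series = [rng.normal(size=(n, d))]
    for _ in range(steps - 1):
        series.append(series[-1] @ A + noise * rng.normal(size=(n, d)))
    y = series[-1] @ beta + noise * rng.normal(size=(n, 1))
    return series, y


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, steps, noise = 5, 4, 0.5  # assumed settings, chosen only for illustration
    A = rng.normal(size=(d, d)) / np.sqrt(d)
    beta = rng.normal(size=(d, 1))

    train_x, train_y = sample(30, A, beta, steps, noise, rng)    # small sample
    test_x, test_y = sample(5000, A, beta, steps, noise, rng)

    models = {
        "classical": fit_classical(train_x[0], train_y),
        "privileged": fit_privileged(train_x, train_y),
    }
    for name, W in models.items():
        # Both models predict from the baseline variables only.
        mse = float(np.mean((test_x[0] @ W - test_y) ** 2))
        print(f"{name}: test MSE = {mse:.3f}")
```

Both learners produce a map from baseline variables alone, so the comparison isolates the effect of using intermediate samples during training. Varying the training sample size, noise level, or number of steps in this toy setup is one way to explore when the extra information helps.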