Practicality of generalization guarantees for unsupervised domain adaptation with neural networks

TMLR [Paper URL]

A survey of unsupervised domain adaptation bounds shows that only a few of them are actually computable

In sensitive applications such as healthcare, we care not only about how our models perform on training data but also about how they perform on unseen data, which might come from a different distribution. In these situations we typically have no labeled data from the new distribution, which puts us in the unsupervised domain adaptation setting. Generalization bounds in this setting are mostly used for model selection or as inspiration for new algorithms. If we instead want performance guarantees on out-of-distribution samples, the literature is sparser. We set out to remedy this by investigating whether any bounds satisfy three specific desiderata: computability, estimability and tightness.

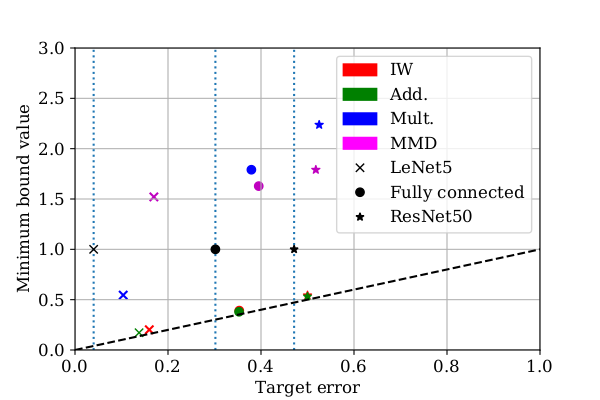

We survey the field of domain adaptation generalization bounds and find that most of the existing literature cannot satisfy these desiderata, even under the strong assumptions of covariate shift and overlapping support. We identify bounds based on the PAC-Bayes framework as the most promising route to computable bounds. We compute two bounds from the literature and compare them with two extensions of previous work by McAllester [1] that use importance weighting and the maximum mean discrepancy (MMD). You can read more about this in our paper [2], where we compute several PAC-Bayes domain adaptation bounds and investigate their tightness on different image classification tasks.
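To make "computable" concrete, here is a minimal Python sketch (NumPy assumed) of the kind of quantities such bounds are built from. It uses a textbook McAllester-style PAC-Bayes bound with Maurer's constant, not necessarily the exact statement in [1] or the bounds derived in [2]. Several things are illustrative assumptions: the diagonal Gaussian posterior and prior over network weights, importance weights w(x) = p_T(x)/p_S(x) that are known exactly and bounded by `w_max`, an empirical Gibbs risk that is treated as exact (in practice it is estimated by sampling from the posterior), and all function names. The importance-weighted variant is a naive rescaling rather than the bound from [2]. The MMD function only estimates a source/target discrepancy; how that term enters an MMD-based bound is not shown.

```python
import math

import numpy as np


def kl_diag_gaussians(mu_q, sigma_q, mu_p, sigma_p):
    """KL(Q || P) between diagonal Gaussians, e.g. a posterior and prior over network weights."""
    mu_q, sigma_q = np.asarray(mu_q, float), np.asarray(sigma_q, float)
    mu_p, sigma_p = np.asarray(mu_p, float), np.asarray(sigma_p, float)
    return float(np.sum(
        np.log(sigma_p / sigma_q)
        + (sigma_q**2 + (mu_q - mu_p) ** 2) / (2 * sigma_p**2)
        - 0.5
    ))


def pac_bayes_bound(emp_risk, kl, m, delta=0.05, loss_range=1.0):
    """McAllester-style bound: R(Q) <= R_hat(Q) + B * sqrt((KL + ln(2 sqrt(m) / delta)) / (2 m)).

    Holds with probability >= 1 - delta over m i.i.d. samples for a loss in [0, B]
    (Maurer's constant combined with Pinsker's inequality; requires m >= 8).
    """
    if m < 8:
        raise ValueError("this form of the bound needs m >= 8")
    slack = math.sqrt((kl + math.log(2 * math.sqrt(m) / delta)) / (2 * m))
    return emp_risk + loss_range * slack


def importance_weighted_bound(losses, weights, kl, w_max, delta=0.05):
    """Naive target-risk bound under covariate shift (hypothetical helper).

    Assumes known weights w(x) = p_T(x) / p_S(x) in [0, w_max]. Each weighted loss
    w(x) * loss lies in [0, w_max] and its expectation under the source equals the
    target risk, so the bound above applies with loss_range = w_max.
    """
    losses, weights = np.asarray(losses, float), np.asarray(weights, float)
    if np.any(weights < 0) or np.any(weights > w_max):
        raise ValueError("weights must lie in [0, w_max]")
    emp_weighted_risk = float(np.mean(weights * losses))
    return pac_bayes_bound(emp_weighted_risk, kl, len(losses), delta, loss_range=w_max)


def mmd2_unbiased(x, y, bandwidth=1.0):
    """Unbiased estimate of squared MMD between samples x (n, d) and y (m, d), Gaussian kernel."""
    x, y = np.asarray(x, float), np.asarray(y, float)

    def gram(a, b):
        sq_dists = np.sum(a**2, 1)[:, None] + np.sum(b**2, 1)[None, :] - 2 * a @ b.T
        return np.exp(-np.maximum(sq_dists, 0.0) / (2 * bandwidth**2))

    kxx, kyy, kxy = gram(x, x), gram(y, y), gram(x, y)
    n, m = len(x), len(y)
    # Drop the diagonal (i == j) terms so the within-sample averages are unbiased.
    term_x = (kxx.sum() - np.trace(kxx)) / (n * (n - 1))
    term_y = (kyy.sum() - np.trace(kyy)) / (m * (m - 1))
    return float(term_x + term_y - 2 * kxy.mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy inputs only: per-sample Gibbs losses in [0, 1] and bounded importance weights.
    losses = rng.uniform(0.0, 0.3, size=2000)
    weights = rng.uniform(0.5, 2.0, size=2000)
    kl = kl_diag_gaussians(np.full(10, 0.1), np.full(10, 0.9), np.zeros(10), np.ones(10))
    print("source bound:", pac_bayes_bound(losses.mean(), kl, len(losses)))
    print("IW target bound:", importance_weighted_bound(losses, weights, kl, w_max=2.0))
    print("MMD^2:", mmd2_unbiased(rng.normal(size=(200, 2)), rng.normal(1.0, 1.0, size=(200, 2))))
```

The slack term in the importance-weighted variant scales with `w_max`, so large weights quickly make such a bound loose. In practice the weights must also be estimated from data, which is where the estimability desideratum comes in.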

- [1] David A. McAllester. A PAC-Bayesian Tutorial with A Dropout Bound. arXiv preprint arXiv:1307.2118, July 2013.

- [2] Adam Breitholtz and Fredrik Johansson. Practicality of generalization guarantees for unsupervised domain adaptation with neural networks. In submission, 2022.